Artwork by Jaiden Saberin (hand-drawn on whiteboard, AI-enhanced)

From Features to Agents: Building a Maturity Model for AI at Audition AI

In the fast-moving world of AI, building something that works is only the beginning. The real challenge is building something that works reliably, serves real business needs, and evolves gracefully over time.

At Audition AI, we've been thinking deeply about how to measure the maturity of our features — and more specifically, our AI agents — in a way that's consistent, actionable, and aligned with the needs of our customers.

The key to delivering an exceptional user experience with any product or service is setting and managing expectations — early and often. In Generative AI, this is even more critical. The technology is still in a relatively nascent stage, and many clients come to the table without a clear grounding in what it can and can't do. As a result, expectations can be unrealistic before the first demo.

Our maturity model addresses this head-on. It gives us a shared language to explain where a feature or agent is in its journey and what that means for outcomes. Clients shouldn't expect a Level 0 feature to be flawless; it may need guidance and iteration to reach the desired result. Making these stages visible keeps us transparent at every step, from first steps to trusted partner. That ties directly to our belief that the most valuable AI agent is the one you can rely on, not just today but for the long run.

Borrowing from Proven Frameworks

We're not starting from scratch here.

Industries from software engineering to aerospace have long used maturity models to measure readiness and capability. The Capability Maturity Model (CMM), Technology Readiness Levels (TRLs), and SaaS lifecycle frameworks all share a common idea:

Capabilities evolve through identifiable stages, from an initial proof of concept to a highly optimized, strategic asset.

We've adapted that thinking for an AI platform context — where "features" might mean connectors, workflows, analytics modules, or autonomous agents — and mapped them into four clear levels of maturity.

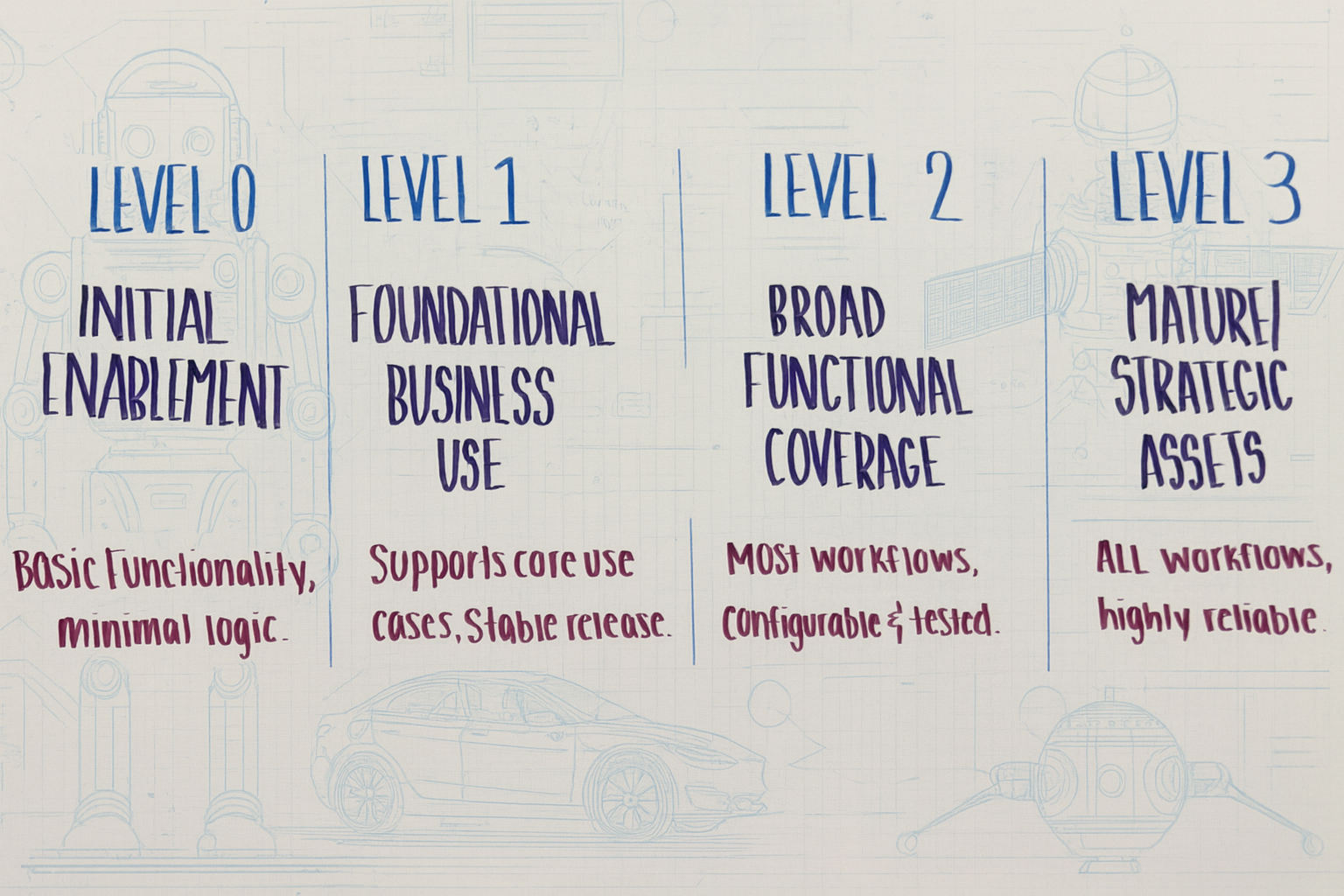

The Four Levels of Maturity

We use a four-level model across all features and agents. It's simple enough for teams to apply consistently but detailed enough to guide roadmap decisions.

Level 0 – Initial Enablement

Technically functional. Wired to the right APIs, passes basic tests, performs a limited set of actions. Documentation is minimal; usually in early adopter hands.

Level 1 – Foundational Business Use

Supports a baseline set of business use cases (often 3–5). Error handling and logging are in place, user-facing documentation exists, and the feature is stable enough for general release.

Level 2 – Broad Functional Coverage

Capabilities expanded to cover most workflows. Configurable for different customer needs, tested against edge cases, and integrated into onboarding and training. Maintained with regular improvements.

Level 3 – Mature / Strategic Asset

This is the gold standard. Supports nearly all relevant workflows, meets performance and reliability benchmarks, and is fully documented. Customer issues are rare, and changes are made only for strategic reasons.
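For teams that track features in code, the four levels map naturally onto an ordered enumeration. The snippet below is only an illustrative sketch: `MaturityLevel`, `Feature`, and `is_generally_releasable` are hypothetical names chosen for this example, not part of any Audition AI API, and the release rule simply restates the Level 1 description ("stable enough for general release").

```python
from dataclasses import dataclass
from enum import IntEnum


class MaturityLevel(IntEnum):
    """The four levels above, as ordered integers so they can be compared."""

    INITIAL_ENABLEMENT = 0
    FOUNDATIONAL_BUSINESS_USE = 1
    BROAD_FUNCTIONAL_COVERAGE = 2
    STRATEGIC_ASSET = 3


@dataclass(frozen=True)
class Feature:
    """Hypothetical record; the field names are illustrative only."""

    name: str
    kind: str  # e.g. "connector", "workflow", "analytics module", "agent"
    level: MaturityLevel


def is_generally_releasable(feature: Feature) -> bool:
    """Level 1 is the first level described as stable enough for general release."""
    return feature.level >= MaturityLevel.FOUNDATIONAL_BUSINESS_USE


if __name__ == "__main__":
    demo = Feature("invoice-triage", "agent", MaturityLevel.INITIAL_ENABLEMENT)
    print(demo.level.name, is_generally_releasable(demo))
```

Using `IntEnum` rather than free-form strings keeps comparisons such as "at least Level 1" explicit and hard to get wrong.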

Why Maturity Matters for AI Agents

The maturity of an AI agent isn't just about how smart it is — it's about how useful, reliable, and integrated it is.

- A Level 0 agent might impress in a demo but fail in real-world complexity.

- A Level 1 agent is a helpful assistant for specific tasks.

- A Level 2 agent starts becoming indispensable — handling a variety of tasks with confidence.

- A Level 3 agent becomes part of the fabric of operations, so reliable that removing it would cause disruption.

By applying this maturity model, we can:

- Prioritize development efforts based on where agents sit today.

- Avoid over-investing in already mature capabilities unless strategically necessary.

- Give customers a transparent view of what they can expect from each agent.
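As a rough illustration of the first two points, prioritization can be expressed as a sort over maturity gaps. This is a sketch under stated assumptions: the `AgentStatus` record, the notion of a per-agent `target_level`, and the tie-breaking rule (least mature first) are all hypothetical and introduced only for this example.

```python
from typing import NamedTuple


class AgentStatus(NamedTuple):
    """Hypothetical record: where an agent sits today and where it should be."""

    name: str
    current_level: int  # 0 to 3
    target_level: int   # 0 to 3


def prioritize(agents: list[AgentStatus]) -> list[AgentStatus]:
    """Return agents that are below target, largest maturity gap first.

    Agents already at or above their target are left out, which mirrors
    the idea of not over-investing in capabilities that are mature enough.
    Ties are broken by lowest current level, then by name.
    """
    behind = [a for a in agents if a.target_level > a.current_level]
    return sorted(
        behind,
        key=lambda a: (-(a.target_level - a.current_level), a.current_level, a.name),
    )


if __name__ == "__main__":
    backlog = [
        AgentStatus("contracts", current_level=3, target_level=3),
        AgentStatus("intake", current_level=0, target_level=2),
        AgentStatus("reporting", current_level=1, target_level=2),
    ]
    for agent in prioritize(backlog):
        print(agent.name, agent.target_level - agent.current_level)
```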

Graduating Agents from One Level to the Next

Moving from one level to the next requires clear milestones:

- Level 0 → Level 1: Add real business use cases, basic error handling, customer-facing documentation.

- Level 1 → Level 2: Expand use cases, add customization, integrate into onboarding and training, handle edge cases.

- Level 2 → Level 3: Achieve near-perfect coverage, optimize for performance and reliability, document fully, and maintain with minimal intervention.
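The milestones above can be treated as a checklist per transition. The following is a minimal sketch, assuming each milestone is tracked as a simple done/not-done flag; the criterion keys and function names are hypothetical labels derived from the bullets, not an official schema.

```python
MAX_LEVEL = 3

# Hypothetical checklist keys, one set per target level, taken from the
# milestones listed above.
GRADUATION_CRITERIA: dict[int, frozenset[str]] = {
    1: frozenset({
        "real_business_use_cases",
        "basic_error_handling",
        "customer_facing_documentation",
    }),
    2: frozenset({
        "expanded_use_cases",
        "customization",
        "onboarding_and_training_integration",
        "edge_cases_handled",
    }),
    3: frozenset({
        "near_perfect_coverage",
        "performance_and_reliability_optimized",
        "full_documentation",
        "minimal_intervention_maintenance",
    }),
}


def missing_for_next_level(current_level: int, completed: set[str]) -> set[str]:
    """Return the milestones still open before the agent can move up one level."""
    if current_level not in range(MAX_LEVEL + 1):
        raise ValueError(f"level must be between 0 and {MAX_LEVEL}")
    if current_level == MAX_LEVEL:
        return set()  # already at the top level; nothing left to graduate to
    return set(GRADUATION_CRITERIA[current_level + 1] - completed)


def can_graduate(current_level: int, completed: set[str]) -> bool:
    """True only if a next level exists and every milestone for it is done."""
    return current_level < MAX_LEVEL and not missing_for_next_level(current_level, completed)


if __name__ == "__main__":
    done = {"real_business_use_cases", "basic_error_handling"}
    print(sorted(missing_for_next_level(0, done)))
    print(can_graduate(0, done))
```

Returning the open milestones, rather than only a yes/no answer, gives engineers and product managers the same concrete list to work from.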

For agents, this might look like:

- Learning to handle multiple workflows.

- Improving resilience to unexpected inputs.

- Integrating with other systems and data sources.

- Earning enough trust to operate autonomously without constant oversight.

The Bigger Picture: Maturity as a Strategic Lens

As AI platforms mature, so too must the frameworks we use to measure them. In the early days, we celebrated when an agent could "do the thing." Now, we measure how well it does the thing in the messy, unpredictable real world — and how much value it adds over time.

By adapting proven maturity models for AI, we're creating a common language for our teams and customers. It helps us make smarter roadmap decisions, communicate clearly about capabilities, and ensure that our agents don't just get smarter — they get better.

Maturity in the Age of AI Platforms

For AI platforms, agent maturity has an extra dimension: learning and adaptation. A traditional software feature is static until developers update it. An AI agent's behavior can shift in response to:

- New data sources

- Updated models

- Emerging workflows

- Changing customer needs

That makes maturity both a moving target and a discipline. We must build agents that are not only capable today but designed to adapt without losing reliability.

Looking Ahead

At Audition AI, our maturity model gives us a shared language for product decisions:

- Engineers know what's required to move an agent to the next level.

- Product managers can prioritize based on maturity gaps.

- Sales and customer success teams can set realistic expectations.

In the future, this framework could help customers evaluate agents directly:

"This agent is Level 3 — a proven, strategic asset for mission-critical workflows."

vs.

"This agent is Level 1 — valuable for specific tasks, but still evolving."

Transparency builds trust and helps customers plan adoption strategically.

Final Thought

In AI, maturity isn't just about intelligence — it's about dependability, adaptability, and strategic value. Our four-level model gives us a way to track that journey, from first steps to trusted partner. Because at the end of the day, the most valuable AI agent is the one you can rely on — not just today, but for the long run.

Want to dig deeper into this framework? We've published a comprehensive AI Maturity Model resource that breaks down each level with actionable guidance, graduation criteria, and advice on advancing your organization from Level 0 to Level 3.
